Artificial intelligence is rapidly becoming embedded in mission-critical operations across finance, healthcare, retail, and government. But while adoption accelerates, compliance maturity is struggling to keep pace. According to The Legal Professional’s Guide to AI Compliance, 68% of organizations implementing AI have experienced at least one compliance incident, at an average remediation cost of $1.2 million. Even more striking: 73% of legal departments report they are unprepared for AI-specific regulations.

Legal teams now sit at the center of a new frontier, where governance, risk, and technology intersect. This article distills practical frameworks, real-world case studies, and regulatory insights from the guide, helping legal professionals build compliance programs that are not only defensible but strategically advantageous.

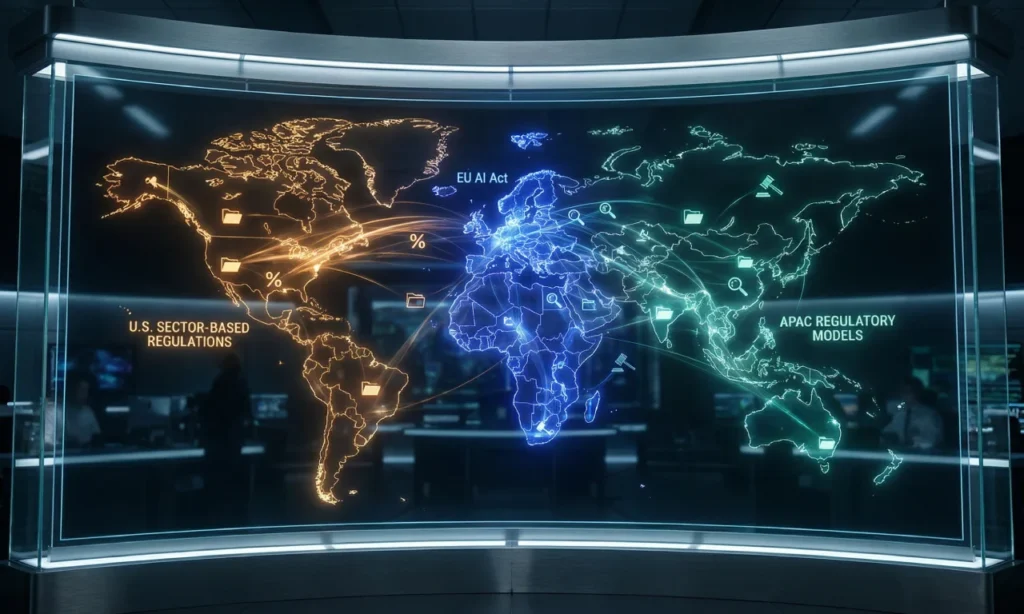

1. Understanding Today’s Global AI Regulatory Landscape

The regulatory landscape is no longer theoretical. It is here, and evolving rapidly.

EU AI Act: The First Comprehensive AI Law

The EU AI Act introduces a risk-tiered regulatory model, with strict requirements around documentation, transparency, data governance, human oversight, and conformity assessments for high-risk systems.

United States: A Sector-Based Approach

In the U.S., regulatory oversight comes through:

- FDA for medical AI

- FTC for deceptive or unfair AI practices

- State and local AI governance laws, such as New York City’s AEDT bias audit rules

Asia-Pacific: Rapid, Divergent Approaches

From Singapore’s governance frameworks to China’s algorithmic regulations and Japan’s human-centric principles, the APAC region is shaping global expectations.

As the guide emphasizes, more than 30 new AI regulations were proposed globally in the past year, making modular, jurisdiction-aware policies essential for legal teams.

2. Implementing a Risk-Based AI Compliance Framework

A risk-based approach is the backbone of modern AI governance, and one of the guide’s core themes.

The Four-Step Compliance Framework

From Page 6 of the guide, the recommended model includes:

- System Inventory & Classification: Catalog all AI systems and classify them according to risk level, data types, and regulatory exposure.

- Risk Identification: Analyze risks across fairness, transparency, data protection, human oversight, and security.

- Impact Assessment: Evaluate legal, ethical, operational, and financial impacts.

- Control Implementation: Apply technical, procedural, and governance controls aligned with the risk tier.
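To make the steps concrete, here is a minimal Python sketch of the first and last of them: inventory classification and control lookup. It is an illustration, not tooling from the guide. The `AISystem` fields, the tier names, the classification criteria, and the `CONTROLS_BY_TIER` mapping are all hypothetical and would need to be replaced with an organization's own taxonomy.

```python
from dataclasses import dataclass, field

# Hypothetical tiers and controls, for illustration only.
CONTROLS_BY_TIER = {
    "high": ["human review", "bias testing", "conformity documentation"],
    "limited": ["user disclosure", "periodic monitoring"],
    "minimal": ["inventory entry"],
}

@dataclass
class AISystem:
    name: str
    uses_personal_data: bool
    affects_legal_rights: bool  # e.g. credit, hiring, or medical decisions
    risks: list = field(default_factory=list)  # output of risk identification

def classify(system: AISystem) -> str:
    """Assign a risk tier from simple, assumed criteria."""
    if system.affects_legal_rights:
        return "high"
    if system.uses_personal_data:
        return "limited"
    return "minimal"

def required_controls(system: AISystem) -> list:
    """Look up the controls that apply to the system's tier."""
    return CONTROLS_BY_TIER[classify(system)]

inventory = [
    AISystem("loan-scoring", True, True, ["fairness", "transparency"]),
    AISystem("support-chatbot", True, False, ["data protection"]),
    AISystem("warehouse-forecast", False, False),
]

for s in inventory:
    print(s.name, classify(s), required_controls(s))
```

Keeping the tier-to-controls mapping in one table means a regulatory change can be absorbed by editing that table rather than every system record.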

Integrating AI Risk into Legal Risk Registers

The guide recommends incorporating AI-specific risk items into the organization’s existing legal risk register, reviewed quarterly to account for regulatory and model changes.

This structured approach shifts compliance from a reactive function to a predictive and proactive discipline.

3. Explainability: The New Legal Standard for Defensibility

Opaque models were once tolerated; now they are a liability.

The guide outlines the four transparency levels legal teams must understand:

- Basic Disclosure (informing users AI is in use)

- Process Transparency (general explanation of how the model works)

- Outcome Explainability (why a specific decision was made)

- Technical Transparency (model documentation, data lineage, testing)

Explainability Tools Legal Teams Should Know

- LIME – Approximates a complex model around a single prediction with a simpler, interpretable one

- SHAP – Quantifies feature importance for individual predictions

- Counterfactuals – Explain “what would need to change” for a different outcome
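A counterfactual explanation is easiest to see on a toy example. The sketch below assumes a hypothetical linear credit-scoring model (the weights, the threshold, and the feature names are invented for illustration) and computes how far one feature would have to move, with everything else held fixed, for a declined applicant to be approved. Production models are rarely this simple, and dedicated tooling searches for counterfactuals rather than solving for them in closed form.

```python
# Hypothetical linear scoring model: approve when score >= THRESHOLD.
WEIGHTS = {"income_k": 0.5, "debt_ratio": -40.0, "years_employed": 2.0}
THRESHOLD = 30.0

def score(applicant: dict) -> float:
    """Weighted sum of the applicant's features."""
    return sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)

def counterfactual(applicant: dict, feature: str):
    """Value `feature` would need for the score to reach THRESHOLD,
    holding all other features fixed. Returns None if already approved."""
    gap = THRESHOLD - score(applicant)
    if gap <= 0:
        return None
    return applicant[feature] + gap / WEIGHTS[feature]

applicant = {"income_k": 50, "debt_ratio": 0.4, "years_employed": 3}
print("score:", score(applicant))
print("income needed:", counterfactual(applicant, "income_k"))
```

Here the applicant scores 15 against a threshold of 30, so the counterfactual for income is an increase from 50 to 80 (in thousands). That is the kind of statement an adverse action notice can put in plain language.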

Why It Matters

Explainability isn’t just a technical best practice; it is legal evidence.

A healthcare company profiled in the guide accelerated its FDA approval by embedding explainability and documentation from the outset, illustrating the regulatory advantage of transparency-first design.

4. Building Adaptive AI Governance Frameworks

Chapter 14 of the guide outlines the four essential pillars of an AI compliance program:

- Governance Structure

- Policies & Procedures

- Controls & Monitoring

- Training & Awareness

Why Modular Policies Are Now Non-Negotiable

Regulations evolve fast. Static policies become outdated quickly.

The guide recommends modular policy architectures with:

- Universal AI principles (fairness, transparency, accountability)

- Risk-tiered requirements

- Use-case-specific guidelines (e.g., generative AI)

- Jurisdiction-specific regulatory modules
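One way to picture a modular architecture is as layers that are composed per system. The following Python sketch assumes each layer is just a list of requirement labels; every module name and requirement string is a hypothetical placeholder rather than language from the guide or from any regulation.

```python
# All module names and requirement strings are hypothetical placeholders.
UNIVERSAL = ["fairness", "transparency", "accountability"]
RISK_TIER = {
    "high": ["human oversight", "pre-deployment impact assessment"],
    "low": ["annual review"],
}
USE_CASE = {"generative_ai": ["output labeling", "prompt logging"]}
JURISDICTION = {
    "EU": ["EU AI Act module"],
    "US-NYC": ["AEDT bias audit module"],
}

def build_policy(tier: str, use_cases: list, jurisdictions: list) -> list:
    """Compose the applicable requirements, de-duplicated, in layer order."""
    layers = [UNIVERSAL, RISK_TIER.get(tier, [])]
    layers += [USE_CASE.get(u, []) for u in use_cases]
    layers += [JURISDICTION.get(j, []) for j in jurisdictions]
    policy = []
    for layer in layers:
        for requirement in layer:
            if requirement not in policy:
                policy.append(requirement)
    return policy

policy = build_policy("high", ["generative_ai"], ["EU"])
print(policy)
```

When a new regulation arrives, only the relevant jurisdiction module changes; the universal principles and risk-tier requirements stay untouched.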

Cross-Functional AI Governance Boards

Effective AI oversight requires collaboration from:

- Legal & Compliance

- Data/AI Engineers

- Ethics Specialists

- Risk Managers

- Business Owners

Such governance bodies provide continuity, documentation, and defensible decision-making.

5. Preparing for Third-Party AI Audits

Third-party audits, especially under the EU AI Act, are becoming mandatory.

The guide recommends assembling a centralized evidence repository, including:

- Completed risk assessments

- Data governance documentation

- Model cards and technical performance evidence

- Drift monitoring logs

- Human oversight procedures
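A centralized repository also makes gap checks easy to automate. The sketch below assumes one folder per AI system and a fixed set of expected files; the file names are hypothetical and simply mirror the evidence types listed above.

```python
from pathlib import Path
import tempfile

# Hypothetical file names; adapt to however the repository is organized.
REQUIRED_EVIDENCE = [
    "risk_assessment.pdf",
    "data_governance.md",
    "model_card.md",
    "drift_log.csv",
    "human_oversight_procedure.md",
]

def missing_evidence(system_dir: Path) -> list:
    """Return the required evidence files absent from a system's folder."""
    return [name for name in REQUIRED_EVIDENCE
            if not (system_dir / name).exists()]

# Demo: a temporary folder holding only two of the five artifacts.
with tempfile.TemporaryDirectory() as tmp:
    folder = Path(tmp)
    (folder / "risk_assessment.pdf").touch()
    (folder / "model_card.md").touch()
    gaps = missing_evidence(folder)

print("Missing:", gaps)
```

A check like this only confirms that a document exists. Whether its content would satisfy an auditor still requires human review.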

Mock audits, both internal and external, are also recommended to expose documentation gaps before regulators do.

6. Training: The Often Overlooked Compliance Requirement

AI compliance is multidisciplinary. As the guide notes, organizations should implement:

- Foundational Training on AI & regulations

- Role-Based Modules for legal, technical, and business teams

- Case-study-driven learning

- Ongoing updates as regulations evolve

Training builds a shared language across departments and prevents compliance from becoming siloed.

7. Case Studies: What Strong AI Compliance Looks Like

The guide provides real-world examples that demonstrate how early compliance reduces risk and accelerates deployment.

Financial Services: Algorithmic Lending

- Tiered validation

- Fairness testing

- Explainability for adverse action notices

- Human review protocols

Result: 35% higher approvals, 40% lower disparate impact, zero findings during regulatory review.
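Fairness testing of this kind often starts with a simple comparison of selection rates. The sketch below computes a disparate impact ratio and applies the four-fifths (0.8) screening heuristic used in US employment-selection guidance. The guide does not say which metric this lender used, so treat the choice of metric as an assumption; the counts are made up, and the 0.8 cutoff is a screening convention rather than a legal bright line.

```python
def selection_rate(approved: int, total: int) -> float:
    """Share of applicants in a group who were approved."""
    return approved / total

def disparate_impact_ratio(group_rate: float, reference_rate: float) -> float:
    """Ratio of a group's approval rate to the reference group's rate."""
    return group_rate / reference_rate

# Made-up counts for illustration.
reference = selection_rate(approved=300, total=500)
protected = selection_rate(approved=210, total=500)

ratio = disparate_impact_ratio(protected, reference)
flagged = ratio < 0.8  # four-fifths screening heuristic
print(f"ratio={ratio:.2f} flagged={flagged}")
```

With these numbers the ratio is about 0.70, below the 0.8 screen, so the model would be flagged for closer review before deployment.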

Healthcare: Diagnostic AI

- Early FDA collaboration

- Strong data governance

- Clinical validation across population subsets

Result: Successful clearance and adoption with physician trust.

Retail: Customer Analytics

- Privacy-by-design architecture

- Modular system design

- Quarterly regulatory reviews

Result: A scalable, globally compliant analytics ecosystem.

Across all cases, one theme emerges: compliance accelerates innovation when integrated early.

Where AI Development Service Fits into Compliance-First Innovation

Organizations increasingly recognize that compliance cannot be bolted on at the end; it must be engineered into AI systems from day one. This is where a specialized AI Development Service becomes invaluable.

A compliance-aware AI development team can help organizations:

- Architect models with built-in explainability

- Embed fairness and bias testing pipelines

- Implement privacy-preserving data practices

- Document models in accordance with upcoming regulations

- Build monitoring systems for drift, bias, and anomalies
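As an example of what drift monitoring can look like in practice, the sketch below computes the Population Stability Index (PSI), one widely used drift statistic, between a baseline and a current binned distribution. The bin shares are invented, and PSI is offered here as one common option rather than a method the guide prescribes.

```python
import math

def psi(expected: list, actual: list, eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.
    Each list holds the share of records per bin and should sum to 1."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

baseline = [0.25, 0.25, 0.25, 0.25]  # score distribution at validation time
current = [0.10, 0.20, 0.30, 0.40]   # distribution observed in production

value = psi(baseline, current)
print(f"PSI = {value:.3f}")
```

A commonly cited rule of thumb treats values above roughly 0.1 as worth investigating and above roughly 0.25 as a significant shift. Those cutoffs are industry conventions, not regulatory requirements, and logging the computed value on a schedule produces the kind of drift evidence an auditor may ask for.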

Instead of slowing innovation, compliance-driven AI development reduces rework, accelerates regulatory approval, and improves system trustworthiness.

Legal teams who partner early with an AI development provider gain visibility, control, and technical alignment across the entire model lifecycle.

Conclusion: AI Compliance Is Now a Strategic Advantage

As AI becomes foundational to competitive strategy, its legal and compliance implications grow exponentially. The Legal Professional’s Guide to AI Compliance makes one point abundantly clear: organizations that build forward-looking, risk-based, adaptable AI governance programs will outperform those that treat compliance as a checkbox.

Key takeaways for legal professionals include:

- The global regulatory landscape is expanding rapidly and requires modular governance.

- Risk-based frameworks ensure resources focus on the highest-impact systems.

- Explainability and documentation are now legal necessities, not technical preferences.

- Cross-functional governance builds defensible, future-ready oversight.

- Compliance, when integrated early, accelerates AI deployment and reduces risk.

Legal teams who embrace these frameworks will not only protect their organizations, but will also enable them to innovate with confidence. If you’re looking to build AI systems that align with regulatory expectations from the start, a dedicated AI Development Service can help you establish a compliant, scalable, and ethically sound AI foundation.